Over the past couple of days, I’ve been experimenting with deep learning to model Bitcoin price movements. Starting from raw price data, I went through several iterations of modeling approaches — each step bringing me a bit closer to understanding the patterns (or lack thereof) hidden in the chaos of cryptocurrency markets.

Step 1: The Dataset

I began with historical Bitcoin price data (from January 2024 through August 2025) downloaded from Investing.com.

Each row included the daily Open, High, Low, Close (Price), Volume, and Change %.

Sample format:

```csv
"Date","Price","Open","High","Low","Vol.","Change %"
"08/21/2025","112,481.1","114,275.0","114,776.3","112,023.0","43.00K","-1.57%"
"08/20/2025","114,275.0","112,878.1","114,616.7","112,409.4","54.63K","1.24%"
"08/19/2025","112,880.3","116,218.9","116,729.2","112,750.9","62.15K","-2.86%"
```

Before training, I cleaned the data:

- Removed commas from numbers (e.g. "112,481.1" → 112481.1).
- Converted volumes like "43.00K" or "1.2M" into raw integers.
- Converted percentages (e.g. "-1.57%") into floats.
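Here is a minimal pandas sketch of those cleaning steps. It is an illustration rather than the exact script behind the results in this post: it assumes the CSV was loaded with every column as a string (e.g. `pd.read_csv(path, dtype=str)`), the helper names `parse_volume` and `clean_prices` are hypothetical, and the "B" (billions) suffix is an assumed extra beyond the K and M seen in the data.

```python
import pandas as pd

# Multipliers for volume suffixes; "B" is an assumed extra beyond K and M
_SUFFIX = {"K": 1_000, "M": 1_000_000, "B": 1_000_000_000}


def parse_volume(s: str) -> int:
    """Hypothetical helper: convert strings like '43.00K' or '1.2M' to integers."""
    s = s.strip()
    last = s[-1].upper()
    mult = _SUFFIX.get(last, 1)
    number = s[:-1] if last in _SUFFIX else s
    return int(round(float(number.replace(",", "")) * mult))


def clean_prices(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: turn the raw string columns into numeric ones."""
    out = pd.DataFrame()
    out["Date"] = pd.to_datetime(df["Date"], format="%m/%d/%Y")
    for col in ["Price", "Open", "High", "Low"]:
        # "112,481.1" -> 112481.1
        out[col] = df[col].str.replace(",", "", regex=False).astype(float)
    out["Vol."] = df["Vol."].map(parse_volume)
    # "-1.57%" -> -1.57 (kept in percent units, not divided by 100)
    out["Change %"] = df["Change %"].str.rstrip("%").astype(float)
    return out
```

Keeping "Change %" in percent units (rather than dividing by 100) is an arbitrary choice here; either works as long as it is applied consistently.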

I then sorted the data chronologically and prepared it for modeling.
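"Prepared it for modeling" typically means turning the daily table into fixed-length input windows and splitting it without shuffling. The sketch below shows one way to do that, under assumptions the post does not pin down: the target is the next day's value of some column, the lookback length is a free parameter, and `sort_chronologically`, `make_windows`, and `chronological_split` are hypothetical helper names.

```python
import numpy as np
import pandas as pd


def sort_chronologically(df: pd.DataFrame) -> pd.DataFrame:
    # The export lists the newest day first; windows must run oldest -> newest
    return df.sort_values("Date").reset_index(drop=True)


def make_windows(features: np.ndarray, target: np.ndarray, lookback: int):
    """Build overlapping windows from a [T, F] feature matrix.

    X[i] holds days i .. i + lookback - 1 and y[i] is the target on the
    following day (index i + lookback). Returns X: [N, lookback, F], y: [N].
    """
    X, y = [], []
    for i in range(len(features) - lookback):
        X.append(features[i : i + lookback])
        y.append(target[i + lookback])
    return np.stack(X).astype(np.float32), np.asarray(y, dtype=np.float32)


def chronological_split(X: np.ndarray, y: np.ndarray, test_size: int = 7):
    # No shuffling: the last `test_size` windows (the final week) form the test set
    return X[:-test_size], y[:-test_size], X[-test_size:], y[-test_size:]
```

Splitting by position after sorting keeps every test window strictly later than the training data; shuffling before the split would let the model see the future.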

Step 2: Pure LSTM

My first model was a straightforward LSTM forecaster:

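```python
import torch.nn as nn


class LSTMForecaster(nn.Module):
    def __init__(self, input_size, hidden_size=64, num_layers=2, dropout=0.2):
        super().__init__()
        self.lstm = nn.LSTM(
            input_size=input_size,
            hidden_size=hidden_size,
            num_layers=num_layers,
            batch_first=True,  # inputs are [batch, time, features]
            # PyTorch applies LSTM dropout only between stacked layers
            dropout=dropout if num_layers > 1 else 0.0,
        )
        self.fc = nn.Linear(hidden_size, 1)

    def forward(self, x):
        # Assumed forward pass, matching CNNLSTMForecaster:
        # predict from the hidden state at the last time step
        out, _ = self.lstm(x)
        return self.fc(out[:, -1, :])
```

I trained it on all available data except the final week (7 days), which I held out as a test set.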
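A minimal training loop for a forecaster like this might look as follows. It assumes MSE loss, the Adam optimizer, and mini-batches, none of which the post specifies, and it uses a small hypothetical `TinyLSTM` stand-in (same `[batch, time, features] → [batch, 1]` interface as `LSTMForecaster`) so the block runs on its own.

```python
import torch
import torch.nn as nn


class TinyLSTM(nn.Module):
    """Hypothetical stand-in with the same interface as LSTMForecaster."""

    def __init__(self, input_size, hidden_size=16):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, 1)

    def forward(self, x):
        out, _ = self.lstm(x)
        return self.fc(out[:, -1, :])


def train_model(model, X_train, y_train, epochs=50, lr=1e-3, batch_size=32):
    """Mini-batch training with MSE loss and Adam (assumed choices)."""
    X = torch.as_tensor(X_train, dtype=torch.float32)
    y = torch.as_tensor(y_train, dtype=torch.float32).unsqueeze(-1)  # [N] -> [N, 1]
    opt = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    for _ in range(epochs):
        model.train()
        perm = torch.randperm(len(X))  # shuffle windows within the training set only
        for start in range(0, len(X), batch_size):
            idx = perm[start : start + batch_size]
            opt.zero_grad()
            loss = loss_fn(model(X[idx]), y[idx])
            loss.backward()
            opt.step()
    return model


@torch.no_grad()
def predict(model, X):
    model.eval()  # disables dropout for inference
    return model(torch.as_tensor(X, dtype=torch.float32)).squeeze(-1)
```

Shuffling is safe here because each window already carries its own history; the held-out final week is never touched during training and is predicted in order.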

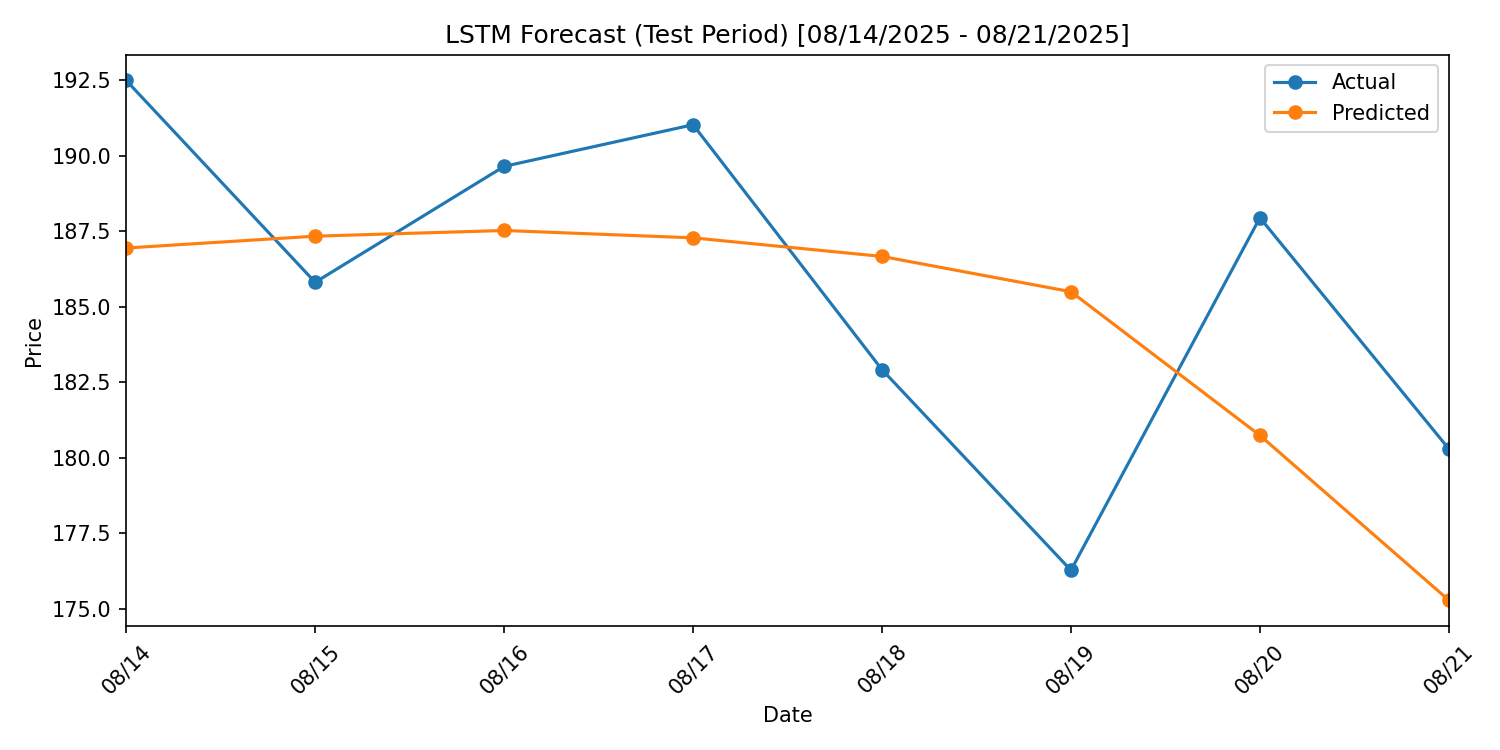

The model produced predictions, but they were too smooth. The LSTM captured long-term averages but missed shorter-term volatility.

Result: directionally useful, but far from precise.
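"Too smooth" and "directionally useful" can both be put into numbers. The sketch below is one way to do it; the function names and exact metric definitions are illustrative choices, not a standard library API. It assumes aligned arrays of actual and predicted prices for the test period.

```python
import numpy as np


def directional_accuracy(actual, predicted) -> float:
    """Fraction of days where the forecast moves in the same direction as the market.

    Both moves are measured from the previous day's *actual* price, so the
    forecast is judged on the call it could have made one day ahead.
    """
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    actual_move = np.sign(actual[1:] - actual[:-1])
    predicted_move = np.sign(predicted[1:] - actual[:-1])
    return float(np.mean(actual_move == predicted_move))


def smoothness_ratio(actual, predicted) -> float:
    """Std of predicted day-to-day changes divided by std of actual changes.

    Values well below 1 mean the forecast is much smoother than the market.
    """
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.std(np.diff(predicted)) / np.std(np.diff(actual)))
```

A forecast that gets direction right most days but has a smoothness ratio far below 1 is exactly the "directionally useful, but far from precise" case.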

Step 3: Adding CNN Layers

After some research, I learned that combining CNNs with LSTMs can help:

- CNN layers extract short-term local patterns.

- LSTMs then capture longer-term dependencies.
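In tensor terms, "short-term local patterns" works like this: `nn.Conv1d` treats each input feature as a channel and slides a small kernel along the time axis, so each output step mixes `kernel_size` consecutive days. With an odd kernel and `padding=kernel_size // 2`, the sequence length is unchanged, so the LSTM still receives one vector per day. The batch size, window length, and feature count below are hypothetical.

```python
import torch
import torch.nn as nn

# Hypothetical sizes: 8 windows of 30 days, 6 features per day
B, T, F = 8, 30, 6
x = torch.randn(B, T, F)

kernel_size = 3
conv = nn.Conv1d(in_channels=F, out_channels=32, kernel_size=kernel_size,
                 padding=kernel_size // 2)

# Conv1d expects [batch, channels, time], so features become channels
h = conv(x.permute(0, 2, 1))
print(h.shape)  # torch.Size([8, 32, 30])

# Back to [batch, time, channels] for a batch_first LSTM
lstm_in = h.permute(0, 2, 1)
print(lstm_in.shape)  # torch.Size([8, 30, 32])
```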

So I built a CNN-LSTM hybrid:

```python
import torch.nn as nn


class CNNLSTMForecaster(nn.Module):
    def __init__(self, input_size, conv_channels=32, kernel_size=3,
                 lstm_hidden=64, lstm_layers=2, dropout=0.2):
        super().__init__()
        pad = kernel_size // 2  # keeps sequence length for odd kernel sizes
        # 1D convolution over time: input features act as channels
        self.conv1 = nn.Conv1d(
            in_channels=input_size,
            out_channels=conv_channels,
            kernel_size=kernel_size,
            padding=pad,
        )
        self.bn1 = nn.BatchNorm1d(conv_channels)
        self.act = nn.ReLU()
        self.do = nn.Dropout(dropout)
        # The LSTM reads the convolutional features, one vector per time step
        self.lstm = nn.LSTM(
            input_size=conv_channels,
            hidden_size=lstm_hidden,
            num_layers=lstm_layers,
            batch_first=True,
            dropout=dropout if lstm_layers > 1 else 0.0,
        )
        self.fc = nn.Linear(lstm_hidden, 1)

    def forward(self, x):
        x = x.permute(0, 2, 1)  # [B,T,F] → [B,F,T] for Conv1d
        x = self.conv1(x)       # [B,C,T]
        x = self.bn1(x)
        x = self.act(x)
        x = self.do(x)
        x = x.permute(0, 2, 1)  # [B,C,T] → [B,T,C] for the LSTM
        out, _ = self.lstm(x)
        return self.fc(out[:, -1, :])  # last time step → [B,1]
```

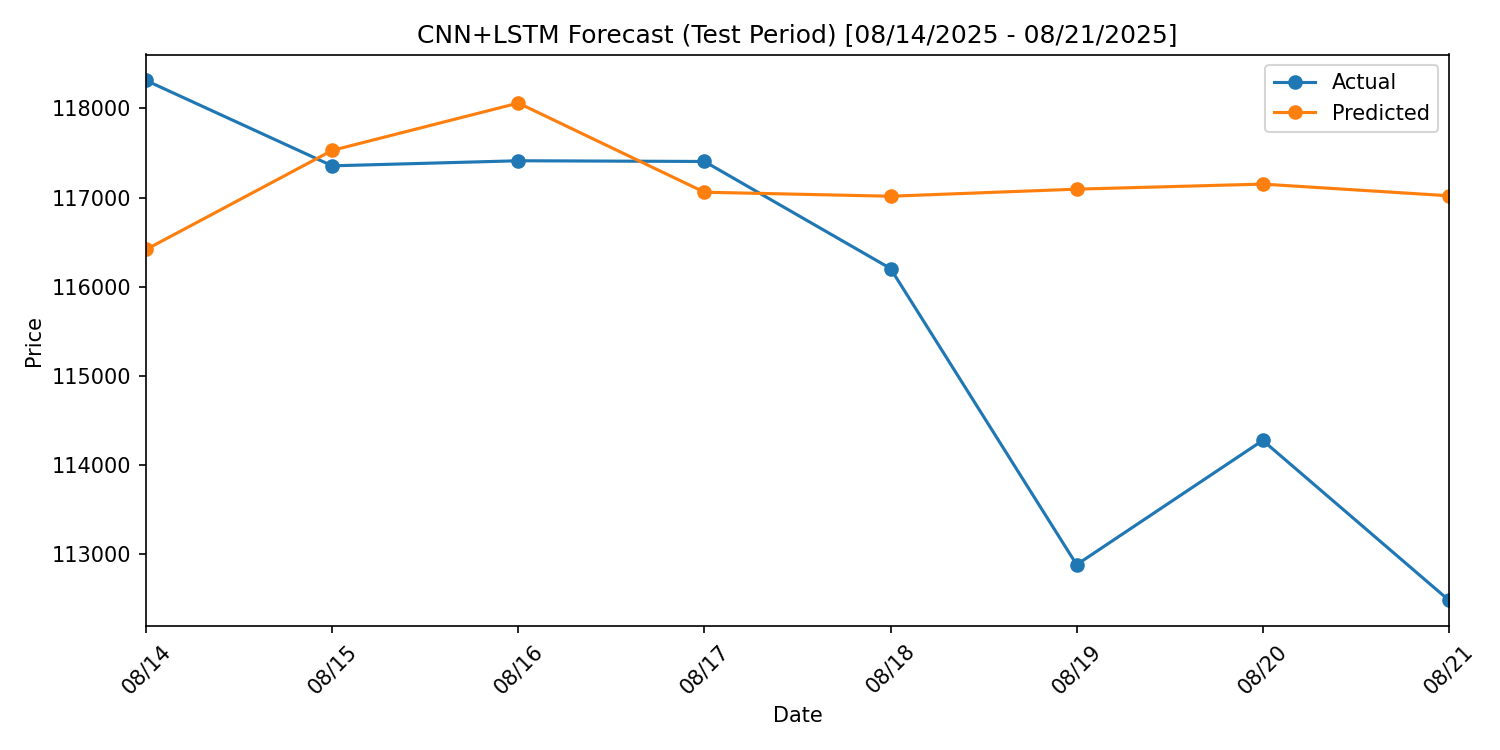

This model gave me more responsive predictions than the pure LSTM — closer to real price movements. But it still wasn’t “right.” It lagged behind sudden dips and overestimated smooth declines.
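One way to check the "lagging behind" impression is to find the time shift that best lines up the forecast with the actual series. This is a sketch, assuming aligned arrays of actual and predicted prices; `estimate_lag` is a hypothetical helper. It correlates day-to-day changes rather than price levels, because trending levels are highly correlated at almost any shift.

```python
import numpy as np


def estimate_lag(actual, predicted, max_lag=5):
    """Return (k, corr): the shift k in days that best aligns predicted[t] with actual[t - k].

    k > 0 means the forecast tends to echo moves that already happened k days earlier.
    """
    a = np.diff(np.asarray(actual, dtype=float))
    p = np.diff(np.asarray(predicted, dtype=float))
    best_k, best_corr = 0, -np.inf
    for k in range(max_lag + 1):
        x, y = (a, p) if k == 0 else (a[:-k], p[k:])
        if len(x) < 3:  # too few points for a meaningful correlation
            break
        corr = np.corrcoef(x, y)[0, 1]
        if corr > best_corr:
            best_k, best_corr = k, corr
    return best_k, float(best_corr)
```

On a 7-day test window there are only six daily changes, so the result is a rough hint at best; running it over a longer backtest period gives a steadier estimate.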

Step 4: A Quant’s Perspective

Recently, I finished *The Man Who Solved the Market: How Jim Simons Launched the Quant Revolution*. What struck me was the mindset: Renaissance Technologies didn’t care why their models worked; they just trusted that if the signals were strong enough, they were actionable.

Their models thrived on huge amounts of data and massive computing power, finding obscure correlations others overlooked.

But they were trading commodities and currencies, markets far less volatile than cryptocurrencies.

And that brought me back to the one reason everyone tells me to stay away from crypto: it’s all too dependent on the media.

Step 5: Looking at Social Media

That’s when it clicked: Everyone warns that crypto markets are “too dependent on hype.” But what if I could actually measure that hype?

The next logical step:

- Pull tweet volumes mentioning Bitcoin (via X/Twitter API or snscrape).
- Align tweet counts with daily price data.
- Explore correlations:
  - Do spikes in tweet activity lead price jumps?
  - Does sentiment (positive/negative) matter more than volume?
  - Can tweet activity become another input feature for the CNN-LSTM model?

This idea would take the model from being price-only to being a multi-modal system: price action + social attention.
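The alignment and lead-lag steps could start out like the sketch below. It rests on assumptions: tweet counts have already been collected and aggregated to one row per day in a DataFrame with hypothetical columns `Date` and `tweet_count`, and prices use the cleaned `Date` and `Price` columns. The helper names are hypothetical. A positive lag asks whether tweet changes on day t line up with price returns on day t + lag.

```python
import pandas as pd


def align_tweets_with_prices(prices: pd.DataFrame, tweets: pd.DataFrame) -> pd.DataFrame:
    """Inner-join daily prices with daily tweet counts on the calendar date."""
    p = prices.assign(Date=pd.to_datetime(prices["Date"]).dt.normalize())
    t = tweets.assign(Date=pd.to_datetime(tweets["Date"]).dt.normalize())
    merged = p.merge(t[["Date", "tweet_count"]], on="Date", how="inner")
    return merged.sort_values("Date").reset_index(drop=True)


def lead_lag_correlation(df: pd.DataFrame, max_lag: int = 3) -> pd.Series:
    """Correlation of tweet-count changes on day t with price returns on day t + lag."""
    returns = df["Price"].pct_change()
    tweet_change = df["tweet_count"].pct_change()
    # shift(-lag) moves the return from day t + lag onto row t
    return pd.Series(
        {lag: tweet_change.corr(returns.shift(-lag)) for lag in range(max_lag + 1)},
        name="corr",
    )
```

If some lag shows a consistent relationship, `tweet_count` could be appended to the feature columns, which raises `input_size` by one in either model.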

Results So Far

- Pure LSTM → Smoothed predictions, missed volatility.

- CNN-LSTM hybrid → Closer to actual daily swings, but still imperfect.

- Planned extension → Integrate social media features to capture hype cycles.

While my models don’t yet “solve Bitcoin,” each step is a lesson in how different architectures capture different aspects of a highly chaotic market.

The next frontier is connecting market data with social data, to see if hype and chatter can help predict what comes next.

Closing Thoughts

I do not have a sliver of the current computing power that major firms or hedge funds deploy. Renaissance Technologies runs its models on server farms humming with thousands of cores.

What I do have is my trusty MacBook Pro.

And while it can’t brute-force millions of simulations overnight, it lets me experiment, iterate, and learn. Every run might take a bit longer, and I might have to be creative in how I batch or simplify my models — but the core principles of quant modeling are the same, whether you’re using a laptop or a datacenter.

That’s actually one of the most exciting parts of this journey: the barrier to entry is lower than ever. With free libraries like PyTorch, pandas, and scikit-learn, I can still test strategies that echo the same structures used at billion-dollar firms.

Overall, crypto may be unpredictable — but by systematically experimenting with models, features, and data sources, I’m getting closer to uncovering the hidden structure in the noise.

This journey is part engineering, part research, and part curiosity. If nothing else, it’s given me a deeper appreciation for the challenges quants face — and maybe, just maybe, a path toward finding my own signals in the crypto storm.